With the current hype in AI, it has become quite hard to avoid writing Python and shipping it at scale. Unfortunately, the Python packaging and environment system is so notoriously convoluted that there is even an infamous xkcd comic about it.

Enter uv, the rising star of the Python community, which has succeeded in solving most of these problems while also being significantly faster. But while uv is great at managing your Python environment, it does not yet have a clear answer for how to bundle and ship your code.

So, in this post I will show you how to build a python project with a monorepo structure and how to use uv and pex to build and distribute it.

You can find the code for this blog post here.

Why not X?

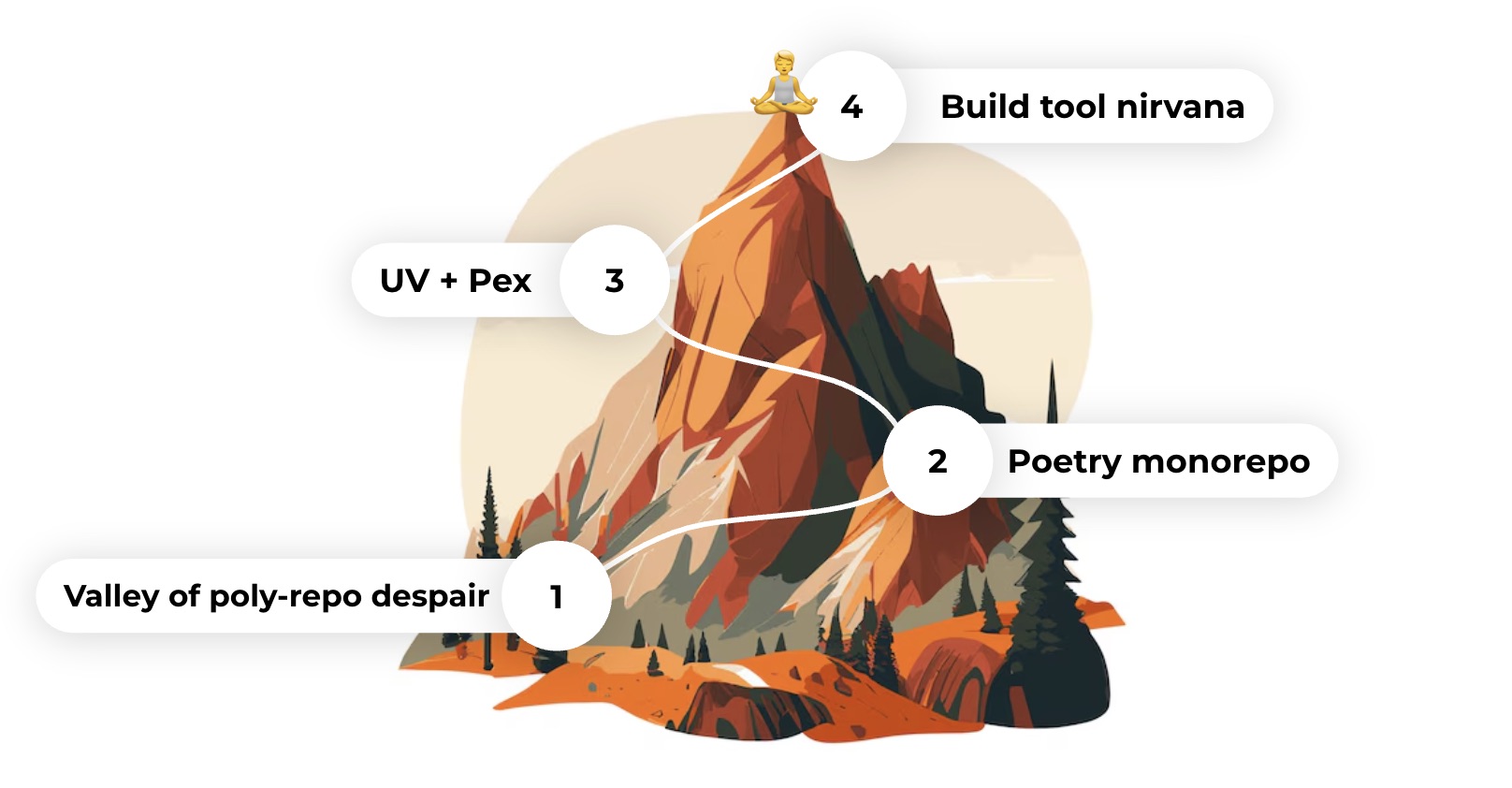

When getting started with Python mono-repositories, there used to be two ways of approaching the problem:

- Start with a “proper” mono repository build tool, i.e. Pants or Bazel.

- Start with “regular” python tooling such as Poetry.

At Visia, we tried and suffered through both approaches, so let me briefly outline their shortcomings.

Build tools

Having had extensive experience with Bazel at my previous job, to the point of contributing open-source build rules, I felt obliged to give it a try. However, during my research I discovered Pants, a build tool that promises the same things that Bazel does while being much more ergonomic and easier to adopt. My favourite part about Pants is that it allows you to write your own build rules in typed Python, which is a massive game changer compared to Bazel’s Starlark.

The problem was just that, like with Bazel, adoption was extremely difficult for the rest of the team and also hard to justify. We had only just moved to a mono repository and had fewer than a dozen build targets, so it was hard to see the value in fighting a myriad of platform and dependency errors.

Regular python tooling

Our next approach was centered around using Poetry and Earthly for managing dependencies between projects. This approach got us quite far: for the first time we were able to share code reliably and build Docker images that contained only the dependencies that were needed.

However, as our code base grew we started to feel the pain of this approach:

- Poetry installs (in Docker) are very slow, especially compared to uv.

- There is no global lock file, which means:

  - Changing the dependencies of one pyproject.toml requires re-locking dependent projects.

  - More integration effort for dependencies.

- There is no global Python environment.

  - This makes it harder to use tools like pyright.

The solution

At first, I thought that we would have to go directly to Pants until I discovered that you can run uv pip compile to create a requirements.txt per workspace. This means that we can get an isolated dependency set for every single deployment target in our repository.
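As a rough sketch of that step, the snippet below assembles a uv pip compile invocation for a single workspace. The paths (apps/server, dist/requirements.txt) and the helper names are hypothetical and only illustrate the shape of the call; the actual recipe repository may drive uv differently.

```python
import subprocess
from pathlib import Path


def compile_requirements_cmd(workspace: Path, output: Path) -> list[str]:
    """Build the argument list for `uv pip compile` for one workspace.

    Assumes the workspace has its own pyproject.toml (hypothetical layout).
    """
    return [
        "uv", "pip", "compile",
        str(workspace / "pyproject.toml"),
        "-o", str(output),
    ]


def compile_requirements(workspace: Path, output: Path) -> None:
    """Run uv and write the pinned requirements file (requires uv on PATH)."""
    output.parent.mkdir(parents=True, exist_ok=True)
    subprocess.run(compile_requirements_cmd(workspace, output), check=True)


if __name__ == "__main__":
    # Only print the command here; call compile_requirements() to execute it.
    ws = Path("apps/server")  # hypothetical workspace path
    print(" ".join(compile_requirements_cmd(ws, ws / "dist" / "requirements.txt")))
```

Keeping the command construction separate from the subprocess call makes it easy to loop over every deployment target in the repository.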

At this point, we can already imagine a setup where we copy all the necessary components into our final Docker container and then install only this dependency set. However, copying around directories is an awkward way of managing dependencies, even though this is precisely how we used to do it with our Poetry setup using Earthly’s IMPORT command.

Instead, we would optimally want to do it the way one is used to from Go or Java: build a static binary or fat jar that includes everything needed to run the program and just copy that entire file into the Docker container. I remembered that Pants used a tool called pex for achieving this goal by turning all of your code into a zipapp.
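To make the zipapp idea concrete, here is a minimal sketch using only the standard library's zipapp module. It is not pex (pex additionally bundles third-party dependencies and handles bootstrapping), and the file names and the run function are made up for illustration.

```python
import subprocess
import sys
import tempfile
import zipapp
from pathlib import Path


def build_demo_zipapp(workdir: Path) -> Path:
    """Create a tiny source tree and pack it into a single executable archive."""
    src = workdir / "src"
    src.mkdir()
    # Hypothetical application code: one module with one entry function.
    (src / "main.py").write_text('def run():\n    print("hello from a zipapp")\n')

    target = workdir / "demo.pyz"
    # main="main:run" makes zipapp generate a __main__.py that calls main.run().
    zipapp.create_archive(
        src,
        target,
        interpreter="/usr/bin/env python3",
        main="main:run",
    )
    return target


def run_zipapp(archive: Path) -> str:
    """Execute the archive with the current interpreter and return its stdout."""
    result = subprocess.run(
        [sys.executable, str(archive)],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(run_zipapp(build_demo_zipapp(Path(tmp))))
```

The resulting single file is what makes the "copy one artifact into the container" workflow possible; pex builds on the same mechanism.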

Creating the app from within the workspace is as simple as running:

    # The last two flags (--scie ...) are optional: they package a full
    # Python distribution with the executable.
    uvx pex \
      -r dist/requirements.txt \
      -o dist/bin.pex \
      -e main \
      --python-shebang '#!/usr/bin/env python3' \
      --no-transitive \
      --sources-dir=. \
      --scie eager \
      --scie-pbs-stripped

The resulting binary can then be run anywhere on your machine! But unfortunately this isn’t quite enough. If you run this command on macOS, it will produce an executable that will not run when you copy it into a Linux Docker container. Likewise, you may want to generate ARM binaries from a Linux x86 CI server.
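A small sketch of how one might detect this mismatch before building: the helper below compares the host's OS and CPU architecture with the deployment target. The function names and the alias table are assumptions for illustration, and the check deliberately ignores finer points such as glibc versus musl.

```python
import platform
from typing import Optional

# Common spellings of the same architecture (illustrative, not exhaustive).
_ARCH_ALIASES = {"amd64": "x86_64", "x64": "x86_64", "arm64": "aarch64"}


def normalize_arch(arch: str) -> str:
    """Map architecture aliases to one canonical name."""
    arch = arch.lower()
    return _ARCH_ALIASES.get(arch, arch)


def can_build_natively(
    target_os: str,
    target_arch: str,
    host_os: Optional[str] = None,
    host_arch: Optional[str] = None,
) -> bool:
    """Return True if the host matches the target OS and architecture.

    host_os and host_arch default to the values reported by the running
    interpreter; they are parameters mainly to make the check testable.
    """
    host_os = (host_os or platform.system()).lower()
    host_arch = normalize_arch(host_arch or platform.machine())
    return host_os == target_os.lower() and host_arch == normalize_arch(target_arch)


if __name__ == "__main__":
    if not can_build_natively("linux", "x86_64"):
        print("Host differs from target: build on matching CI or inside Docker.")
```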

A note on cross-compiling

To solve this problem, we have two options, both of which are implemented in the recipe repository:

- Build pex files on CI machines that match the target OS and architecture (see .github/workflows/release.yaml).

- Use a Docker container on your machine to run the pex command.

The latter is implemented in ./scripts/build_pex_in_docker.sh, but it is a bit cumbersome.

First, we need to mount the entire local repository, which can lead to funky behavior if you don’t watch out for untracked folders such as virtual environments.

Second, the requirements.txt file we compile in the first step contains absolute file:// paths for local dependencies. We can fix this with a simple sed command.
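For readers who prefer Python over sed, the same path rewrite could look roughly like the sketch below. The requirement line format and the two roots (/Users/me/repo on the host, /repo in the container) are assumptions for illustration; the script in the recipe repository uses sed and may differ in detail.

```python
import re


def rewrite_local_paths(requirements_text: str, host_root: str, container_root: str) -> str:
    """Point absolute file:// URLs under host_root at container_root instead."""
    host_prefix = "file://" + host_root.rstrip("/")
    container_prefix = "file://" + container_root.rstrip("/")
    # Only match the prefix when it is a full path component, so that a
    # sibling directory such as /Users/me/repo-old is left untouched.
    pattern = re.compile(re.escape(host_prefix) + r"(?=/|\s|$)")
    return pattern.sub(lambda _match: container_prefix, requirements_text)


if __name__ == "__main__":
    # Illustrative input; the exact lines uv emits may look different.
    sample = (
        "requests==2.32.3\n"
        "shared-lib @ file:///Users/me/repo/libs/shared\n"
    )
    print(rewrite_local_paths(sample, "/Users/me/repo", "/repo"))
```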

Conclusion

And there you have it: A lightweight and extensible approach to bundling your apps for production while benefiting from the most advanced Python environment manager. However, I should note that we are still missing the most important qualities of a monorepo build tool, most notably caching.

The good news is that with the flow presented here, you are effectively baby-stepping your way towards adopting Pants. Pants also uses a single lock file and relies on pex files that can be copied into Docker images. So consider this training wheels for your eventual Pants adoption journey.

Addenda

Here are some miscellaneous sharp edges that we ran into while implementing this outside of this toy example:

- Even if you specify the requirements with -r, pex will try to use the pyproject.toml to discover dependencies. Depending on the deps, this will fail since it will try to pip install the workspace dependencies. We fixed this by first copying the package’s source files to the dist directory and by setting --no-transitive to prevent pex from trying to use the pyproject files of our dependencies.

- For very large pex files (>2 GB), Python <3.13 will fail to load the zip file, so we had to use --layout=packed (discussion).
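If you want to automate the second workaround, a sketch along these lines could decide when to add the flag. The threshold constant, the use of on-disk source size as a proxy for the final pex size, and the helper names are all assumptions; measure your real artifacts before relying on it.

```python
from pathlib import Path

# Assumed threshold, taken from the ">2 GB" observation above.
TWO_GB = 2 * 1000**3


def directory_size(root: Path) -> int:
    """Sum the sizes of all regular files under root (rough size estimate)."""
    return sum(p.stat().st_size for p in root.rglob("*") if p.is_file())


def layout_flags(estimated_bytes: int, python_version: tuple[int, int]) -> list[str]:
    """Return extra pex flags for large archives on Python older than 3.13."""
    if estimated_bytes > TWO_GB and python_version < (3, 13):
        return ["--layout=packed"]
    return []


if __name__ == "__main__":
    size = directory_size(Path("."))
    print(layout_flags(size, (3, 12)))
```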